In a world in which AI systems increasingly affect our lives, we need a new culture of technological design. A critical discussion of the design choices in these technologies and their political charge is more urgent than ever.

In 1980, the philosopher of technology Langdon Winner argued that artifacts embody politics, by which he meant that not only humans but also technical things have political qualities. In his paper “Do Artifacts Have Politics?” he extensively discussed “racist overpasses” and “labor-stealing tomato harvesting machines.” Artifacts concretize specific forms of power, either intentionally, as in the case of Robert Moses’s overpasses, deliberately designed in the 1930s to make public transportation impossible and thus to block racial minorities’ access to some recreational parks, or unintentionally, as when the introduction of mechanical harvesters in the 1940s reshaped the social relationships of tomato production in California.

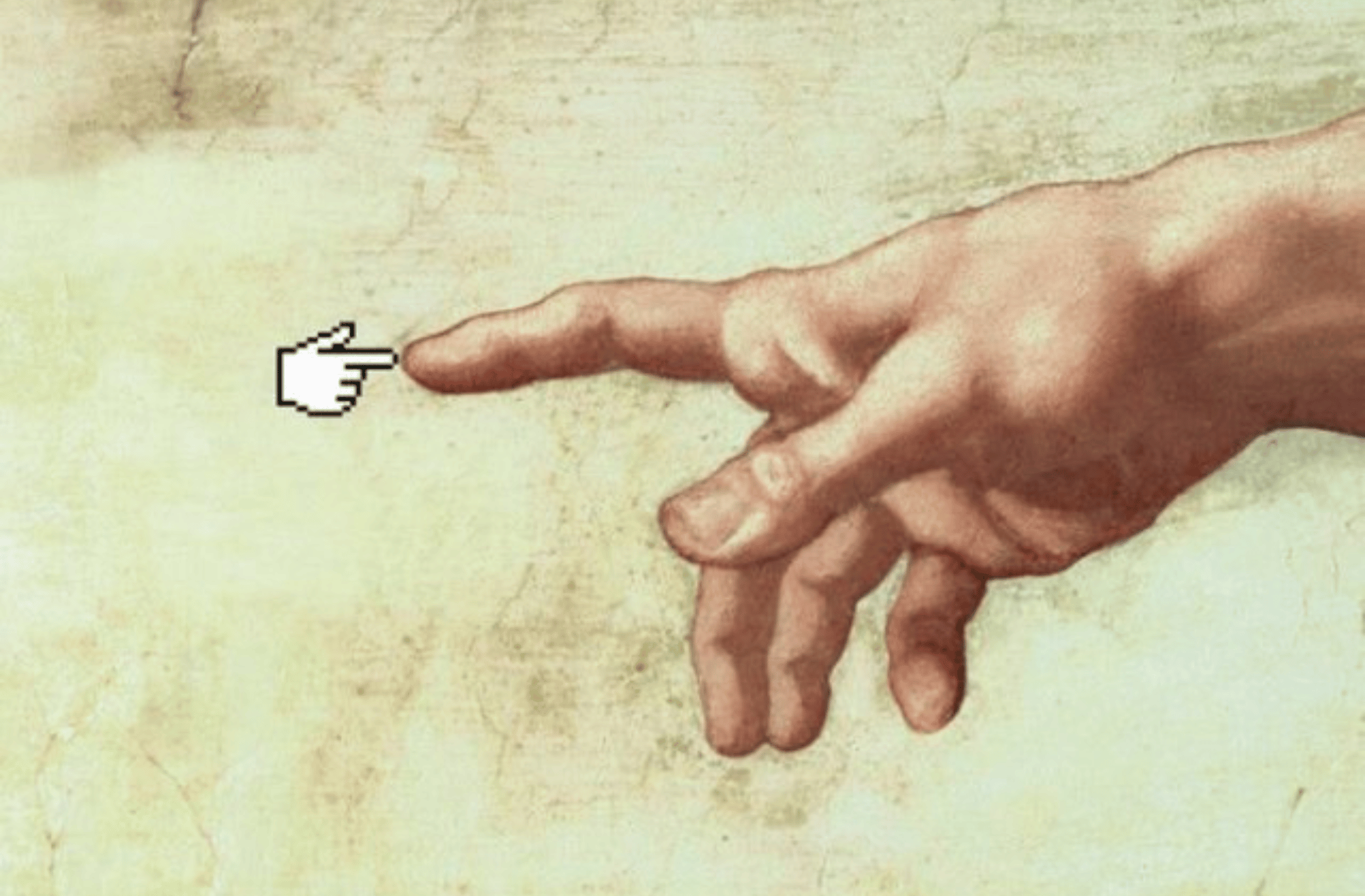

Today a special kind of artifact has taken center stage in the public debate: artificial intelligence (AI) systems. They have a strong political charge, in intentional and unintentional ways. Think, for example, of the recommender systems embedded in large online platforms, such as social networks, and their impact at the individual and collective levels. Yet, the public debate is mostly dominated by controversies between those raising concerns about the catastrophic risks of AI and those persuaded that it will solve any problem. Instead, a more concrete and urgent risk concerns the political qualities present at the beginning, at the center, and at the end of the AI design processes. The point here is not that the problem of how AI systems are designed, by whom, for which goals, and in which context is the only or the most important one, or that every problem with AI (from bias and discrimination to privacy and responsibility) can be solved with good design. Rather, it is that we should devote more attention to the power of design and, accordingly, to how some design choices can determine the moral and political connotations of these artifacts.

A key element in this is to rethink our current technological design culture. Technologies do not exist in a vacuum: they are shaped by the societies in which they are developed and, conversely, they shape them. This is particularly true for those technologies, such as AI, that are today deeply interwoven into the fabric of society and shape the way we live: in how we conduct business, how we make and nourish friendships, how we see the world, how we decide on a purchase, and how we choose a movie, a song, or a romantic relationship. In many cases they perpetuate, and sometimes they reinforce, historical, racist, gender, or sexual-orientation biases.

It is tempting to go back to the case of Moses’s overpasses and suggest that the problem is only in the choice of the wrong (racist, in the case of Moses) values, as if any negative outcome could be solved by incorporating positive values into design. But things are more complicated and nuanced than they appear. To design with positive values and beneficial outputs in mind does not guarantee against unexpected and unintentional forms of technological mediation: the tomato-harvesting machine is a case in point. While it is essential to try to anticipate possible problems at the design level, it is also fundamental to learn how to imagine the possible future consequences that can emerge when very complex, highly flexible, and, in many cases, still experimental technologies, such as AI, are inserted in their contexts of use.

So while acknowledging that designing is power, it is fundamental to rethink this power accordingly. This means not only understanding how to design fairer, more inclusive, beneficial, and sustainable AI technologies, but also designing them in more transparent and democratic ways that consider the broader socio-technical context in which they are embedded. This is a far from easy task for which there is no simple solution. Education, research, and public discussion are probably the most important pillars to start with. Education is vital to create a class of future designers of technologies aware of the moral, social, and political charge of the artifacts they create. Research is important to offer spaces of experimentation where different expertise and approaches can be integrated. Public discussion is crucial to promote a common ground for alternative views.

Around the world there are already several promising efforts that need to be cultivated further. The Digital Humanism movement founded in Vienna in 2019 is one of them. The Vienna Manifesto lays out its motivation and goals; it is a call to action that invites academic communities, industrial leaders, politicians, policymakers, and professional societies to promote the design of information technologies in accordance with human needs and values. This and similar initiatives are essential for the definition of an ambitious agenda in the direction of a new culture of technological design. This agenda should focus on the scientific and the educational levels, but it should also include philosophical, ethical, and political elements. Building a future in which AI’s great power is shared among all for the benefit of humankind can only be achieved if we stop looking at AI as a merely technical problem and instead bring in other perspectives to design the kind of society we want to live in. Social impacts and negative consequences from the design of these technologies are not issues to be solved ex post; they must be addressed as part of the design process itself. Moreover, design choices need to be reconceptualized as political ones as well, and thus as requiring an open and wide debate on their possible outcomes.

To paraphrase Joseph Weizenbaum, the famous computer scientist and computer ethics pioneer, the relevant issues are not technical but ethical and political. However, good intentions are not enough when designing complex socio-technical systems, such as AI, that affect our present and future life at the individual and the collective levels. Scientists, engineers, and computer scientists equipped with an appreciation for the humanities are far better prepared to avoid the pitfalls of technocratic power. They will be better leaders in a changing world that needs to navigate the complex and interconnected challenges of AI development, climate change, and increasing social and economic inequalities. Many difficult issues remain open, but this is not an excuse to postpone this paradigmatic shift: a new design culture for technology should be the rule rather than the exception.

Winner, L. (1980), “Do artifacts have politics?”, Daedalus, 109, 121-136.

Vienna Manifesto on Digital Humanism (2019), https://caiml.org/dighum/dighum-manifesto/.

Viola Schiaffonati is associate professor of logic and philosophy of science at the Department of Electronics, Information and Bioengineering, Politecnico di Milano. In June 2024, she was the senior Digital Humanism Fellow at the IWM.